Of course, Generative AI is everywhere. Literally everyone is confronted with generated content, either because we generate texts, images, audio, or even video ourselves, or because others generate the content we consume. In various discussions, I keep running into one general problem: a lack of awareness of what GenAI really „is“ and what it is not. In this article, I will provide some insights for a general audience and highlight the need for education and awareness in the area of Generative AI.

For decades, computer science has developed and spread a common understanding of what computers are able to do. We all learned that computers are based on algorithms that perform complex calculations in very little time. We learned that they are good at indexing and crawling databases, and that they are good at finding content. Search engines like Google grew enormously because their computers and algorithms helped people find information produced by other people (or by themselves). We were all trained to think and believe that the results produced by search engines and databases are mostly correct, sometimes wrong, but in all cases something produced by humans.

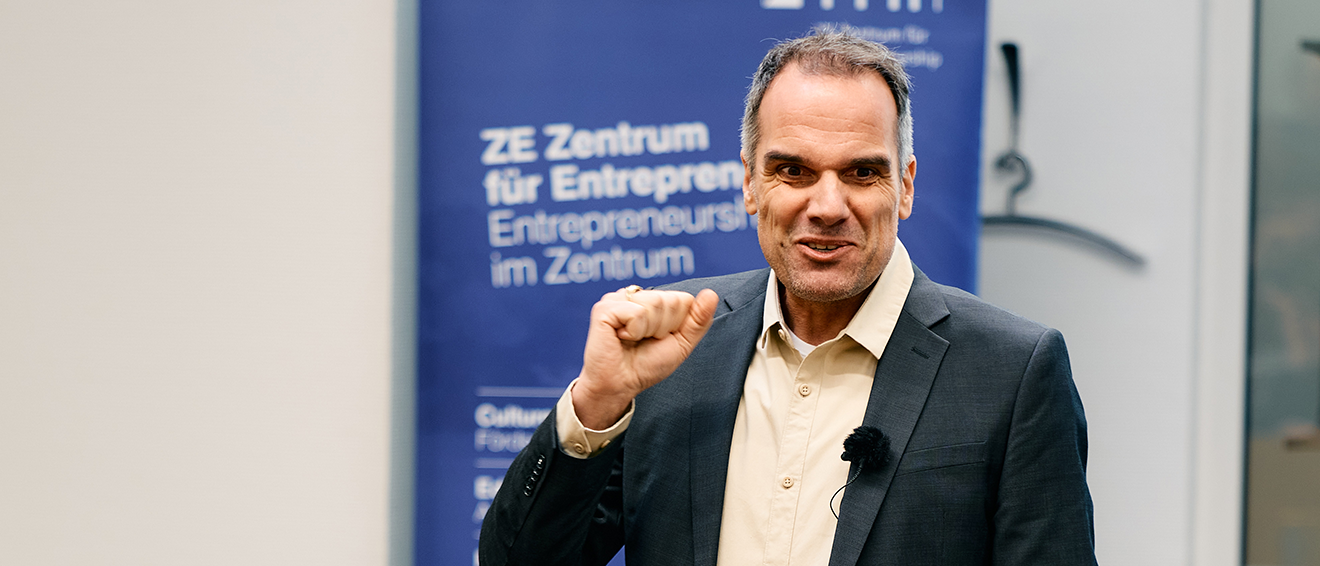

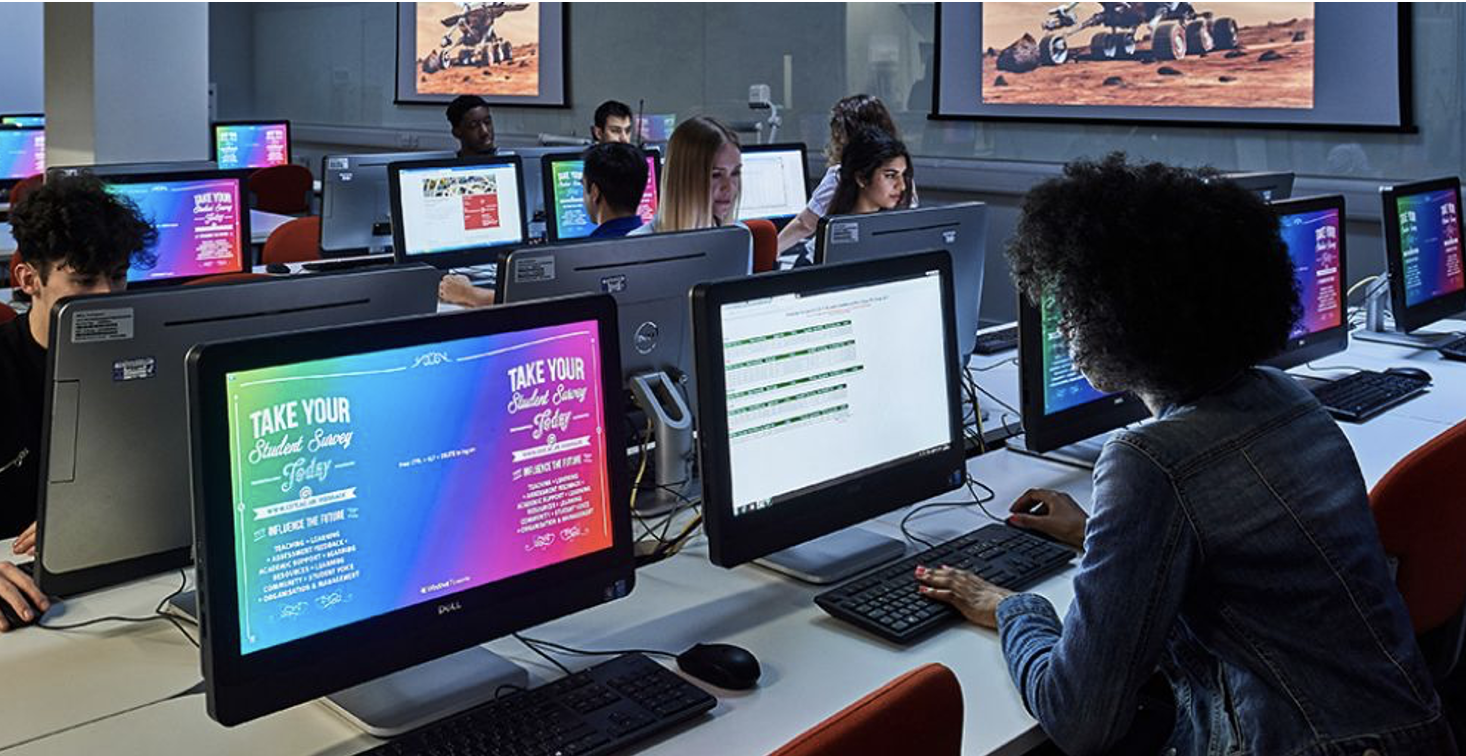

For example, when entering the search term „a picture of students learning computer science“, Google will produce this result (among others):

We can be sure, that this picture captures a real-world scene. All the elements on this picture are real. The people, the screens, keyboards, computers, etc. This is a result, we „found“, because the scene has actually happened and someone captured it with a camera.

If we enter the same „query“ (or we should better call it a prompt here) to Midjourney, we receive a similar result:

And here’s the big difference: nothing in this picture is real. None of these people exist. They never met in this room (which doesn’t exist either), and none of these computers is real. It’s all a fabricated vision of what „a picture of students learning computer science“ could look like. It doesn’t capture anything that happened in the real world. It’s completely generated. It’s fake by nature.

In many cases, people don’t care whether the content is captured or generated. They want a nice-looking picture of a red sports car for their living room wall. Fine, here you go:

Perfect for the living room wall, but none of this has ever been built. And now the problem: we can no longer distinguish between captured and generated scenes. Image generation has reached a level of quality that is close to perfect. Often, neither people nor algorithms can reliably tell what has been generated and what has been captured.

I chose images because they make it easy to grasp how GenAI works. But it’s the same with other media types. Texts produced by ChatGPT are „generated“: not captured, not „found“, not written by „humans“. ChatGPT delivers an artificial, statistically plausible text that resembles texts we could find in the real world. But it is not real. Audio files may sound human and yet be completely artificial. Videos generated by Sora, for example, look like recordings of real-world scenes, but they aren’t. And often no one can tell the difference.

We are living in a time when few, if any, binding rules require generated content to be labeled and distinguished from content captured in the real world. This means that anything we see, read, hear, or watch in the digital world may be real or fake. We all need to be aware of this.

Therefore, broad public education to build this awareness is required. Fake news can be produced more easily than ever, and it can be „proven“ by adding generated pictures and videos, and even fake audio statements by the supposed participants of such a fake scene.

GenAI is much faster than any fact-checking or regulation. We need to be aware of this.

It’s largely unclear who holds the ownership rights to such generated content, or who is responsible for it. What if a GenAI model used my picture as training data? What if it generates pictures that look like me? What if it imitates a style of photography or painting that I created? Who is responsible? Whom can we trust? With a technology like this and the global reach of social media, information as such is in danger.

Finally, let’s consider our digital twins: avatars, Facebook or Instagram accounts, and so on. One thing everyone has is a profile picture. Yes, we know that influencers and social media fans use filters to enhance their images. But now GenAI can produce completely fake yet real-looking profiles, and it can enhance images in ways no one notices. Many of our digital twins already look better than our real faces. We, as humankind, are drifting into a fake world of better-looking selves. Movies like „Ready Player One“ predicted this years ago. But if we’re not aware of it, this will become our reality.

I strongly advocate for, and work on, public education to build this awareness and to prevent the world from becoming a nice-looking digital copy of a bad-looking reality.